Few-camera Dynamic Scene Variational Novel-view Synthesis

Abstract

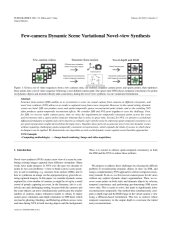

[Figure 1: pipeline diagram. Labels: Few-camera videos; Structure from motion (efficient pose estimation robust to dynamic objects; relaxed point reconstruction without temporal consistency); Camera poses; Sparse noisy point clouds; Virtual camera path; Variational optimization with temporal consistency; Novel depth and RGB.]

Figure 1: Given a set of video sequences from only a few cameras, our method computes camera poses and sparse points, then optimizes those points into a novel video sequence that follows a user-defined camera path. Our space-time SfM relaxes temporal consistency for points on dynamic objects and instead robustly enforces consistency during novel view synthesis via our variational formulation.
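The Figure 1 caption describes leaving sparse SfM points temporally inconsistent and recovering consistency later through a variational optimization, but it does not state the energy. As a minimal sketch, assuming a quadratic energy on a single pixel's depth track over time (a data term on frames where a noisy sparse point exists, plus a temporal smoothness term weighted by a hypothetical parameter `lam`), the idea can be illustrated as follows. This is a generic Tikhonov-style temporal smoother, not the authors' formulation; the function name `smooth_depth_track` and its parameters are illustrative assumptions.

```python
from typing import List, Optional


def smooth_depth_track(observations: List[Optional[float]], lam: float = 1.0) -> List[float]:
    """Minimize a quadratic data + temporal-smoothness energy for one depth track.

    E(d) = sum_t w_t * (d_t - z_t)**2 + lam * sum_{t>=1} (d_t - d_{t-1})**2

    observations[t] is a noisy depth z_t (w_t = 1), or None where no
    sparse point was reconstructed in frame t (w_t = 0).
    Hypothetical helper for illustration only.
    """
    n = len(observations)
    if n == 0:
        return []
    if lam <= 0:
        raise ValueError("lam must be positive")
    if all(z is None for z in observations):
        raise ValueError("at least one frame must carry an observed depth")

    # Setting dE/dd = 0 gives a symmetric positive definite tridiagonal system
    # A d = b: diagonal w_t + lam * (#temporal neighbours), off-diagonals -lam.
    diag: List[float] = []
    rhs: List[float] = []
    for t, z in enumerate(observations):
        w = 0.0 if z is None else 1.0
        neighbours = int(t > 0) + int(t < n - 1)
        diag.append(w + lam * neighbours)
        rhs.append(0.0 if z is None else z)
    off = -lam

    # Thomas algorithm: forward elimination (pivots stay positive because A is SPD) ...
    c_prime = [0.0] * n
    d_prime = [0.0] * n
    c_prime[0] = off / diag[0] if n > 1 else 0.0
    d_prime[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - off * c_prime[i - 1]
        c_prime[i] = off / denom if i < n - 1 else 0.0
        d_prime[i] = (rhs[i] - off * d_prime[i - 1]) / denom

    # ... then back substitution.
    depth = [0.0] * n
    depth[-1] = d_prime[-1]
    for i in range(n - 2, -1, -1):
        depth[i] = d_prime[i] - c_prime[i] * depth[i + 1]
    return depth
```

For example, `smooth_depth_track([1.0, None, 3.0], lam=1.0)` returns approximately `[1.5, 2.0, 2.5]`: the missing middle frame is filled in and the two noisy endpoints are pulled toward each other. Restricting the sketch to one track keeps the system tridiagonal, so it solves in linear time; a full image-space formulation with spatial terms as well would instead lead to a larger sparse linear system.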

Main file

JFIG2020___Consistent_Depth_Estimation_for_Dynamic_Scene_Multi_view_Synthesis (1).pdf (22.81 MB)

| Origin | Files produced by the author(s) |
|---|---|